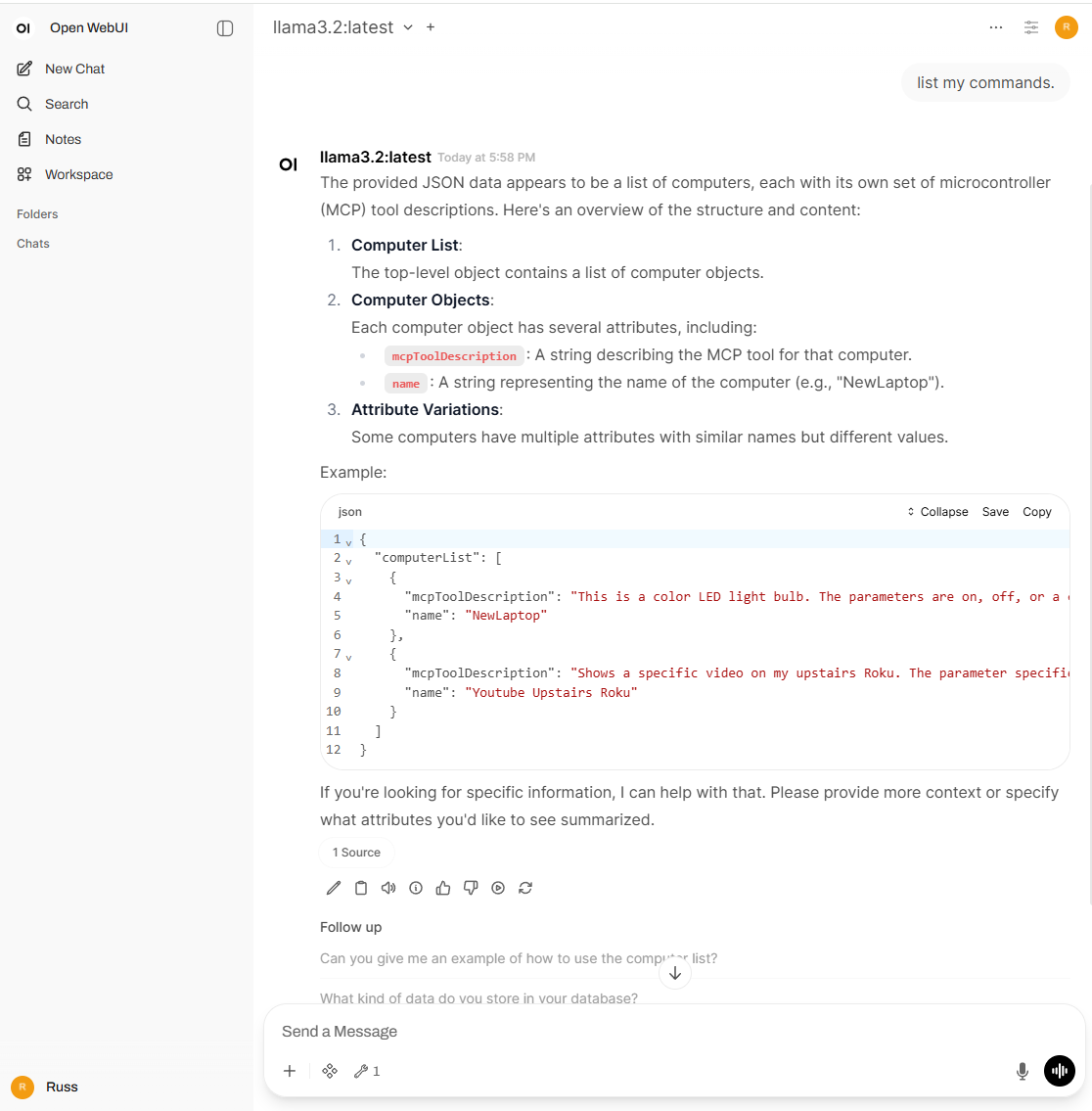

I used the local TRIGGERcmd stdio MCP server with a local LLM served by Ollama, using Open WebUI as the front end.


I used this bash script to run an MCP-to-OpenAPI proxy server in Ubuntu under WSL:

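```bash
#!/bin/bash -xv
# Download the TRIGGERcmd stdio MCP server binary for Linux x86-64
curl -O https://agents.triggercmd.com/triggercmd-mcp/triggercmd-mcp-linux-amd64
chmod +x triggercmd-mcp-linux-amd64

# Build the proxy image from the Dockerfile in the current directory (it copies the binary in)
docker build -t mcp-proxy-server .

# Run the proxy on port 8000; replace the placeholder with your TRIGGERcmd token
docker run -it -p 8000:8000 -e TRIGGERCMD_TOKEN="my triggercmd token" mcp-proxy-server
```

This is my Dockerfile: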

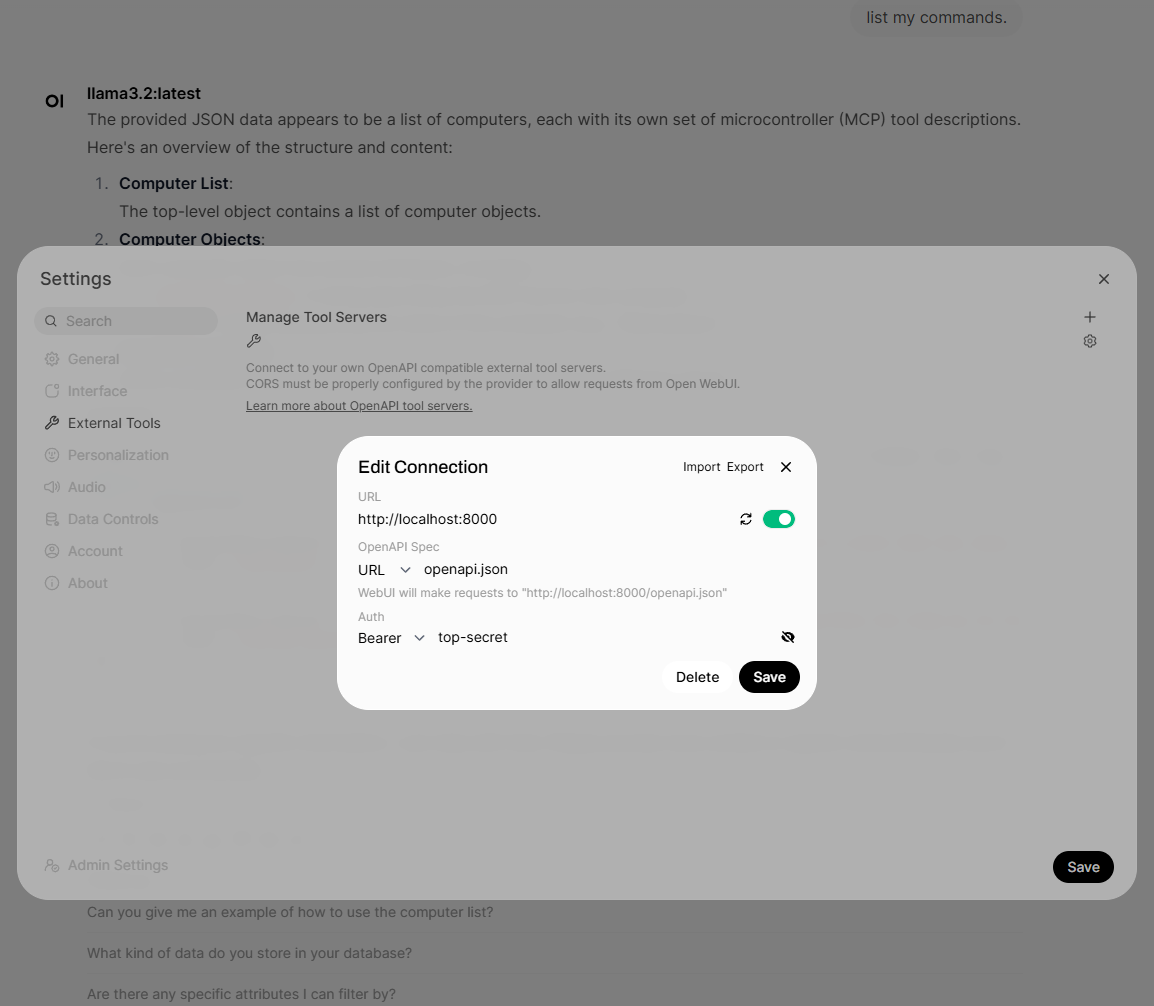

```dockerfile
FROM python:3.11-slim
WORKDIR /app
RUN pip install mcpo uv
COPY triggercmd-mcp-linux-amd64 /triggercmd-mcp-linux-amd64
# Replace with your MCP server command; example: uvx mcp-server-time
CMD ["uvx", "mcpo", "--host", "0.0.0.0", "--port", "8000", "--api-key", "top-secret", "--", "/triggercmd-mcp-linux-amd64"]
```

This is how you set it up in the Settings - External Tools - Manage Tool Servers:
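Before adding the proxy in Open WebUI, it can help to confirm the container is actually up and answering. The following is a minimal bash sketch, assuming the port (8000) and API key (`top-secret`) from the Dockerfile above, and further assuming that mcpo serves its generated OpenAPI schema at `/openapi.json` (the FastAPI default) and accepts the key as a Bearer token. `openapi_status` and `check_tool_server` are hypothetical helper names, not part of mcpo or TRIGGERcmd.

```bash
#!/bin/bash
# Hypothetical helpers for smoke-testing the mcpo proxy container.
# Assumptions (not from the original post): mcpo serves its generated
# OpenAPI schema at /openapi.json and accepts the --api-key value as a
# Bearer token. Defaults match the Dockerfile: port 8000, key "top-secret".

# Print the HTTP status code for GET <base_url>/openapi.json.
# curl reports 000 when it cannot connect at all; "|| true" keeps the
# function's exit status at 0 so the caller decides what counts as failure.
openapi_status() {
  local base_url="${1%/}"   # drop a trailing slash to avoid "//openapi.json"
  local api_key="$2"
  curl -s -o /dev/null -w '%{http_code}' --max-time 5 \
    -H "Authorization: Bearer ${api_key}" \
    "${base_url}/openapi.json" || true
}

# Print OK and return 0 if the proxy answers 200; otherwise report to stderr and return 1.
check_tool_server() {
  local base_url="${1:-http://localhost:8000}"
  local api_key="${2:-top-secret}"
  local status
  status="$(openapi_status "$base_url" "$api_key")"
  if [ "$status" = "200" ]; then
    echo "OK: tool server at ${base_url} is reachable"
    return 0
  fi
  echo "FAIL: got HTTP ${status} from ${base_url%/}/openapi.json" >&2
  return 1
}
```

Usage, after saving the helpers as `check-tool-server.sh` (a hypothetical file name): `source check-tool-server.sh && check_tool_server http://localhost:8000 top-secret`. A `000` status usually means nothing is listening on that address, while a `401` or `403` would point at the API key rather than the container.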